LangChain

Founded Year

2022

Stage

Series B | Alive

Total Raised

$135M

Valuation

$0000

Last Raised

$100M | 2 mos ago

Mosaic Score

The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

+6 points in the past 30 days

About LangChain

LangChain specializes in the development of large language model (LLM) applications and provides a suite of products that support developers throughout the application lifecycle. It offers a framework for building context-aware, reasoning applications, tools for debugging, testing, and monitoring application performance, and solutions for deploying application programming interfaces (APIs) with ease. It was founded in 2022 and is based in San Francisco, California.

ESPs containing LangChain

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

The AI agent development platforms market offers solutions for enterprises to develop and launch AI agents based on foundation models. These platforms include frameworks and infrastructure to build AI agents that can execute tasks, answer queries, and automate workflows autonomously. Some vendors offer “drag-and-drop” interfaces and other no-code or low-code solutions that enable teams without in-…

LangChain is named an Outperformer among 15 other companies, including OpenAI, Anthropic, and Cohere.

Research containing LangChain

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned LangChain in 8 CB Insights research briefs, most recently on Sep 5, 2025.

Sep 5, 2025 report

Book of Scouting Reports: The AI Agent Tech Stack

May 16, 2025 report

Book of Scouting Reports: 2025’s AI 100

Apr 24, 2025 report

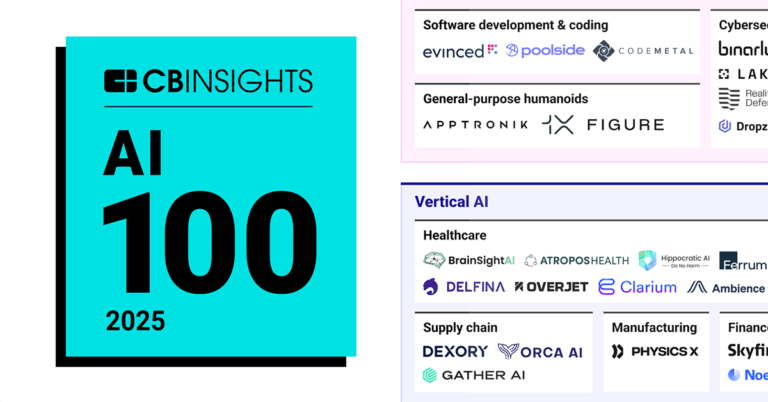

AI 100: The most promising artificial intelligence startups of 2025

Mar 6, 2025

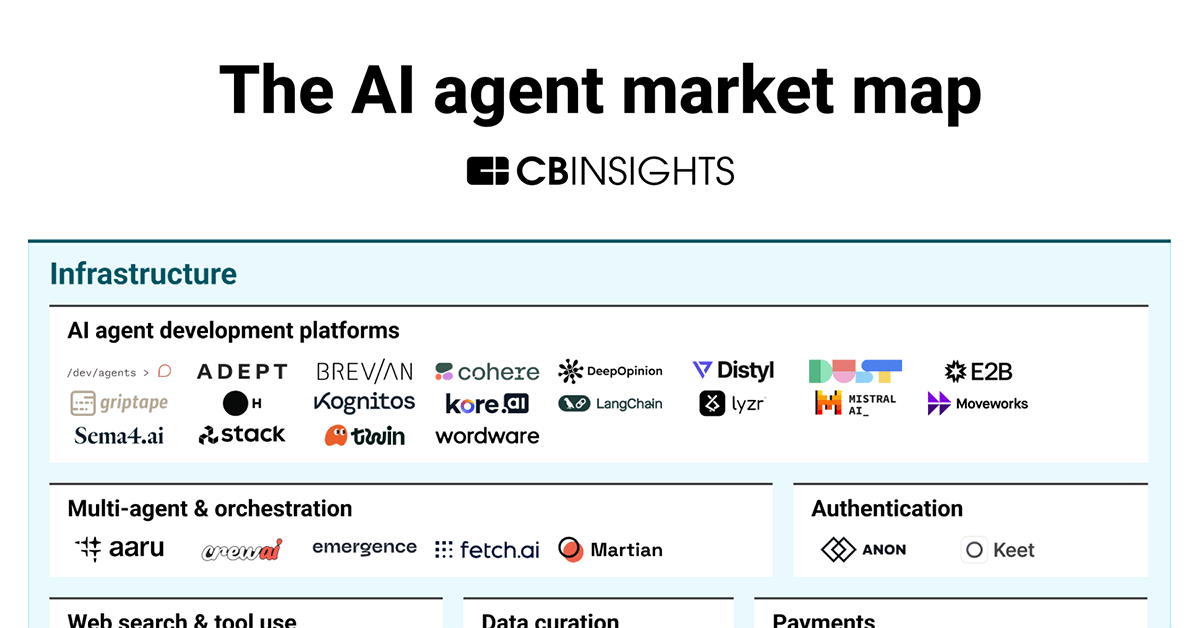

The AI agent market map

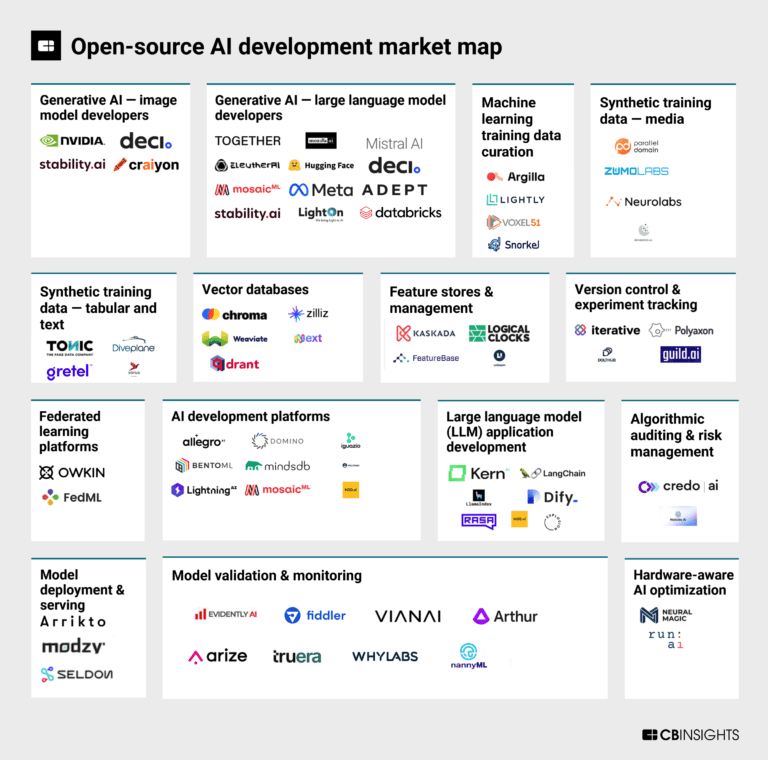

Oct 13, 2023

The open-source AI development market map

Expert Collections containing LangChain

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

LangChain is included in 8 Expert Collections, including Unicorns- Billion Dollar Startups.

Unicorns- Billion Dollar Startups

1,286 items

AI 100 (All Winners 2018-2025)

200 items

Generative AI 50

50 items

CB Insights' list of the 50 most promising private generative AI companies across the globe.

Generative AI

2,793 items

Companies working on generative AI applications and infrastructure.

Artificial Intelligence

10,195 items

AI Agents & Copilots Market Map (August 2024)

322 items

Corresponds to the Enterprise AI Agents & Copilots Market Map: https://app.cbinsights.com/research/enterprise-ai-agents-copilots-market-map/

Latest LangChain News

Aug 28, 2025

DevOps.com

AI Agent Onboarding is Now a Critical DevOps Function

August 28, 2025

You wouldn’t throw a new engineer into production with no documentation, no context, and no support. Yet that’s exactly how many teams are treating AI agents. As autonomous systems and LLM-based agents begin taking on responsibilities once reserved for humans, their onboarding is emerging as a DevOps function just as vital as CI/CD, observability, or access control.

AI agents aren’t magic. They need well-defined environments, scoped permissions, data pipelines, and feedback loops to succeed. If DevOps doesn’t take ownership of onboarding them, you’re not deploying intelligence; you’re releasing liability.

AI Agents are Entering Production Faster Than You Think

AI agents are already helping teams triage tickets, summarize logs, provision environments, run basic tests, write code snippets, and answer developer questions. But here’s the rub: most of them are being deployed without formal ownership. A developer experiments with an LLM wrapper, gets useful results, and suddenly it’s a critical part of the toolchain. No review. No plan. No infrastructure.

That’s a problem. Once an agent starts interacting with your real systems, the risks escalate quickly. Is it rate-limited? Does it have access to sensitive environments? What happens when it fails silently or starts making invalid assumptions based on outdated context? Who logs the output? Who reviews the audit trail?

These aren’t academic questions. Teams are already running into issues where agents overwrite configs, cause noisy retries, or misroute customer support requests, not because they’re flawed, but because nobody treated their onboarding as a formal deployment. Remember the Replit.ai incident with the deleted database? Exactly.

For better or for worse, DevOps is the only team with the discipline and systems thinking to treat AI agents as first-class deployable units.
Without that lens, you’re building a house of cards with great demos and terrible ops.

Treat Agents Like New Engineers, Because They Are

If a junior engineer joined your team tomorrow, would you give them root access and hope for the best? Of course not. You’d give them a sandbox, read-only access to production logs, limited write privileges, a checklist of onboarding tasks, and clear escalation paths. Agents need structured inputs, constrained actions, guardrails, monitoring, and progressive trust. They need to be versioned, tracked, and retrained when the environment changes. You need to know which model version is responsible for which action, and be able to reproduce its decision path.

DevOps teams already do this for humans. They use identity and access management (IAM), canary deployments, secret rotation, observability stacks, alerting rules, and incident response playbooks. Those same tools should be extended to agents.

Onboarding is an Operational Contract

Too many orgs assume that because LLMs are pretrained, they don’t need onboarding. Wrong. You’re not training the model, you’re configuring the agent. Onboarding is the moment where an AI system stops being a general-purpose model and starts being a specific actor in your environment. That involves:

- Connecting it to the right APIs and systems
- Defining the scope of what it should and shouldn’t do
- Providing context it can reference reliably

If the system changes, the agent needs retraining or re-prompting. If the security policies evolve, its access needs auditing. If an outage occurs, it needs logging hooks and rollback support.

DevOps already knows how to build and maintain contracts between services, infrastructure, and teams. The same rigor should apply to agents. If your onboarding process for a human engineer takes three weeks, but your onboarding for an AI agent is a Slack message with an API key, you’re ignoring the operational debt you’re accumulating. And it will come due.
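
To make the idea of an onboarding contract concrete, here is a minimal Python sketch of a deny-by-default scope check. It is not taken from the article or from any particular platform; `AgentScope`, `is_permitted`, and every agent, action, and resource name below are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentScope:
    """Hypothetical onboarding contract for one agent (all names are illustrative)."""
    agent_id: str
    model_version: str
    allowed_actions: frozenset = field(default_factory=frozenset)
    read_only_resources: frozenset = field(default_factory=frozenset)


def is_permitted(scope: AgentScope, action: str, resource: str, write: bool) -> bool:
    """Deny by default: unlisted actions and writes to read-only resources are refused."""
    if action not in scope.allowed_actions:
        return False
    if write and resource in scope.read_only_resources:
        return False
    return True


# Assumed example agent: may label tickets and read logs, but never write to prod logs.
triage_agent = AgentScope(
    agent_id="ticket-triage",
    model_version="example-model-v1",
    allowed_actions=frozenset({"read_logs", "label_ticket"}),
    read_only_resources=frozenset({"prod-logs"}),
)

print(is_permitted(triage_agent, "label_ticket", "ticket-queue", write=True))  # True
print(is_permitted(triage_agent, "read_logs", "prod-logs", write=True))        # False
print(is_permitted(triage_agent, "delete_database", "prod-db", write=True))    # False
```

Keeping the scope in a frozen, versionable object means a change to an agent's permissions can be reviewed and rolled back like any other configuration change.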
Observability and Feedback Loops are Non-Negotiable

Deploying an agent without observability is like flying blind in a storm. You need to know what it did, why it did it, and what the outcome was, in real time. That means structured logs, traceable decision paths, and dashboards that capture the agent’s behavior over time. Not just success/failure metrics, but patterns: How often does it repeat tasks? Where does it hesitate? What data sources lead to hallucinations, and what conditions exist when that data is being extracted?

Even more critical: you need feedback loops. Human-in-the-loop review, reinforcement learning from human feedback (RLHF), or at the very least, a way to flag and annotate failures. An agent that never receives feedback is an agent that will never improve.

Just like CI/CD systems rely on test coverage, linting, and rollbacks, AI agents need regression checks. Changes to prompts, context, or dependencies should be tested in pre-prod, versioned, and approved before rollout. And yes, rollback mechanisms should exist if something goes sideways.

You can’t control everything a generative agent might say or do. But you can control the infrastructure it operates in. Observability turns black-box behavior into manageable risk.

DevOps Should Own the Agent Lifecycle

The AI agent lifecycle isn’t a hackathon project anymore. It’s a real deployment pipeline. That pipeline needs owners, automation, and safeguards. DevOps should own that lifecycle. That includes staging, testing, promoting, and retiring agents just like any other service. It includes documenting context sources, prompt templates, version numbers, and access patterns. It includes maintaining CI/CD pipelines for updates, retraining, and observability checks.

You wouldn’t let a new API go to prod without a runbook and incident policy. Why should an agent be any different?

This shift is already underway.
Platforms like LangChain, AutoGen and Microsoft’s AutoGen Studio are bringing software engineering discipline to the agent space. But platform features alone don’t guarantee maturity, despite all the fears about DevOps specialists being one of the professions that’ll disappear due to AI. More than just the operational part, DevOps is the cultural layer that ensures tools are used responsibly.

As generative systems become persistent actors in cybersecurity and infrastructure in general, they deserve lifecycle management, not just one-off deployments. Ownership isn’t optional. The risks are too high.

Closing Thoughts

DevOps is now about shaping how intelligent agents interact with systems, data, and people. That means new responsibilities, but also new leverage. Done right, agent onboarding becomes a competitive advantage.

Teams that treat agents as real actors, with structured onboarding, scoped permissions, and rigorous observability, will ship faster and break fewer things. They’ll identify hallucination risks before they hit customers. They’ll iterate on prompts with the same precision they bring to tests.

What’s more, if your DevOps team isn’t planning how to onboard and manage AI agents today, you’re already behind. Start building the scaffolding now. Because your next teammate might not be human, but they’ll still need your help to succeed.
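
The article's call for structured logs that tie each action to a model version, and for pattern metrics such as how often an agent repeats a task, could be sketched as follows. This uses only the Python standard library; the record fields, `audit_record`, `repeat_rate`, and the sample values are assumptions made for illustration, not a schema from the article or from any agent platform.

```python
import json
from datetime import datetime, timezone


def audit_record(agent_id, model_version, prompt_version, action, outcome, context_sources):
    """Build one JSON log line recording what an agent did and under which versions."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,
        "model_version": model_version,
        "prompt_version": prompt_version,
        "action": action,
        "outcome": outcome,
        "context_sources": list(context_sources),
    }
    return json.dumps(record, sort_keys=True)


def repeat_rate(log_lines):
    """Fraction of logged actions that repeat an earlier (agent, action) pair."""
    seen = set()
    repeats = 0
    for line in log_lines:
        rec = json.loads(line)
        key = (rec["agent_id"], rec["action"])
        if key in seen:
            repeats += 1
        seen.add(key)
    return repeats / len(log_lines) if log_lines else 0.0


# Hypothetical sample: the same summarization task is logged twice.
lines = [
    audit_record("log-bot", "example-model-v1", "prompt-3", "summarize:build-42", "ok", ["ci-logs"]),
    audit_record("log-bot", "example-model-v1", "prompt-3", "summarize:build-42", "ok", ["ci-logs"]),
    audit_record("log-bot", "example-model-v1", "prompt-3", "label:ticket-7", "failed", ["tickets"]),
]
print(repeat_rate(lines))
```

Because every line carries `model_version` and `prompt_version`, a failed action can be traced back to the exact configuration that produced it, which is the precondition for the regression checks and rollbacks the article describes.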

LangChain Frequently Asked Questions (FAQ)

When was LangChain founded?

LangChain was founded in 2022.

Where is LangChain's headquarters?

LangChain's headquarters is located at 42 Decatur Street, San Francisco.

What is LangChain's latest funding round?

LangChain's latest funding round is Series B.

How much did LangChain raise?

LangChain raised a total of $135M.

Who are the investors of LangChain?

Investors of LangChain include Institutional Venture Partners, Sequoia Capital, Benchmark, Lux Capital, Abstract and 5 more.

Who are LangChain's competitors?

Competitors of LangChain include OpenPipe, FlowiseAI, Humanloop, Lyzr, LlamaIndex and 7 more.

Compare LangChain to Competitors

Cohere operates as an enterprise artificial intelligence (AI) company building foundation models and AI products across various sectors. The company offers a platform that provides multilingual models, retrieval systems, and agents to address business problems while ensuring data security and privacy. Cohere serves financial services, healthcare, manufacturing, energy, and the public sector. It was founded in 2019 and is based in Toronto, Canada.

Dify operates as a platform for developing generative artificial intelligence (AI) applications within the technology industry. The company provides tools for creating, orchestrating, and managing AI workflows and agents, using large language models (LLMs) for various applications. Dify's services are designed for developers who wish to integrate AI into products through visual design, prompt refinement, and enterprise operations. It was founded in 2023 and is based in San Francisco, California.

Fireworks AI specializes in generative artificial intelligence platform services, focusing on inference and model fine-tuning within the artificial intelligence sector. The company offers an inference engine for building production-ready AI systems and provides a serverless deployment model for generative AI applications. It serves AI startups, digital-native companies, and Fortune 500 enterprises with its AI services. It was founded in 2022 and is based in Redwood City, California.

CrewAI develops technology related to multi-agent automation within the artificial intelligence sector. The company provides a platform for building, deploying, and managing AI agents that automate workflows across various industries. Its services include tools, templates for development, and tracking and optimization of AI agent performance. The company was founded in 2024 and is based in Middletown, Delaware.

LlamaIndex specializes in building artificial intelligence knowledge assistants. The company provides a framework and cloud services for developing context-augmented AI agents, which can parse complex documents, configure retrieval-augmented generation (RAG) pipelines, and integrate with various data sources. Its solutions apply to sectors such as finance, manufacturing, and information technology by offering tools for deploying AI agents and managing knowledge. LlamaIndex was formerly known as GPT Index. It was founded in 2023 and is based in Mountain View, California.

Stack AI offers enterprise artificial intelligence (AI) solutions, focusing on the deployment of AI agents and workflow automation across sectors. Its offerings include a no-code platform for building and deploying AI applications, such as AI agents and chatbots, aimed at improving back-office processes. Its services apply to industries including healthcare, finance, education, and government, with attention to data security and compliance. It was founded in 2022 and is based in Cambridge, Massachusetts.