Qdrant

Founded Year

2021Stage

Series A | AliveTotal Raised

$37.79MLast Raised

$28M | 2 yrs agoRevenue

$0000Mosaic Score The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

-36 points in the past 30 days

About Qdrant

Qdrant focuses on providing vector similarity search technology, operating in the artificial intelligence and database sectors. The company offers a vector database and vector search engine, which deploys as an API service to provide a search for the nearest high-dimensional vectors. Its technology allows embeddings or neural network encoders to be turned into applications for matching, searching, recommending, and more. Qdrant primarily serves the artificial intelligence applications industry. It was founded in 2021 and is based in Berlin, Germany.

Loading...

Qdrant's Product Videos

ESPs containing Qdrant

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

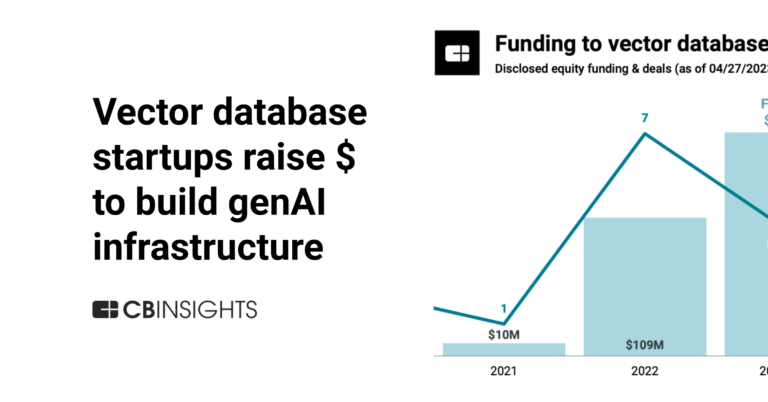

The vector databases market focuses on providing databases optimized for high-dimensional, vector-based data. These databases are designed to efficiently store, manage, and query large volumes of vectors — i.e., mathematical representations of data points in multidimensional space. Vector databases cater to a wide range of applications, including machine learning, natural language processing, rec…

Qdrant named as Outperformer among 10 other companies, including Oracle, Elastic, and Pinecone.

Qdrant's Products & Differentiators

Qdrant Open Source

Qdrant Open Source: Allows users to deploy Qdrant locally with Docker under the Apache 2.0 license. We’ve seen over 250 million downloads across all our open-source packages.

Loading...

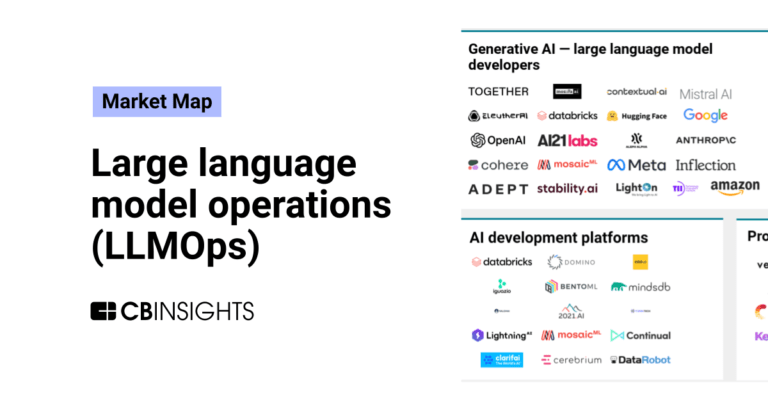

Research containing Qdrant

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Qdrant in 6 CB Insights research briefs, most recently on Sep 5, 2025.

Sep 5, 2025 report

Book of Scouting Reports: The AI Agent Tech Stack

May 16, 2025 report

Book of Scouting Reports: 2025’s AI 100

Apr 24, 2025 report

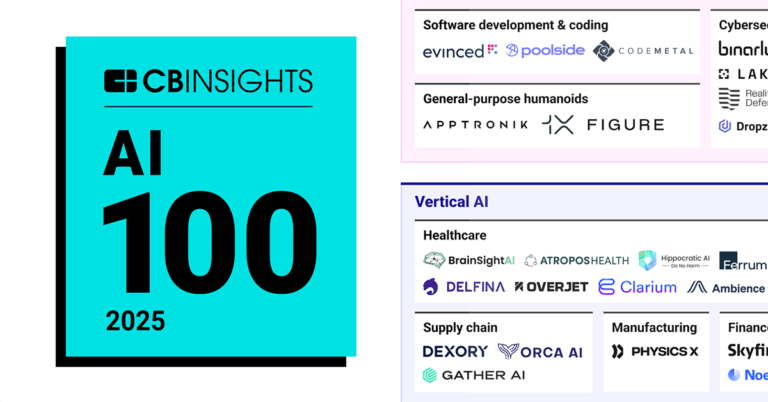

AI 100: The most promising artificial intelligence startups of 2025

Oct 13, 2023

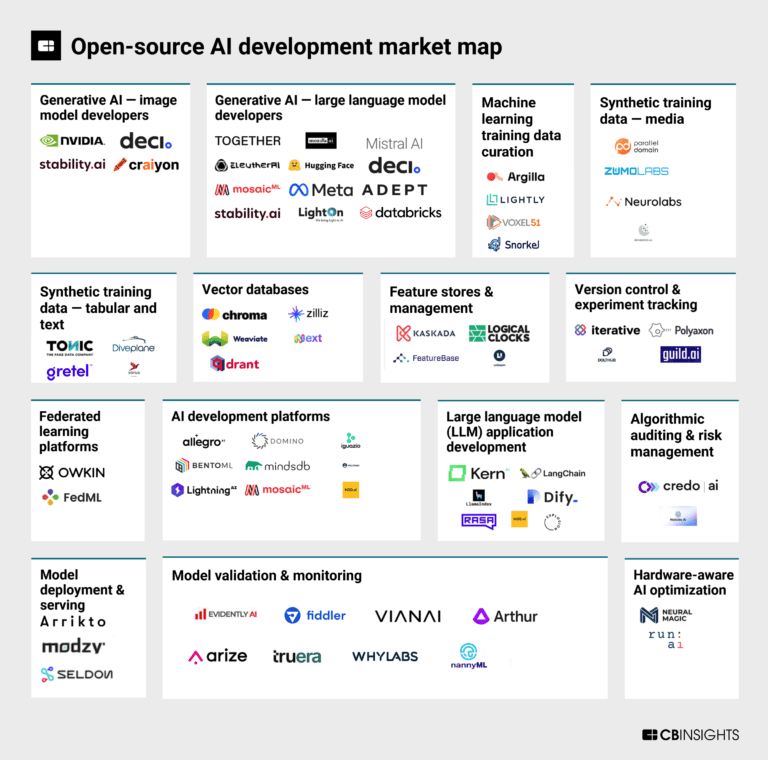

The open-source AI development market mapExpert Collections containing Qdrant

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Qdrant is included in 4 Expert Collections, including Artificial Intelligence.

Artificial Intelligence

12,580 items

Companies developing artificial intelligence solutions, including cross-industry applications, industry-specific products, and AI infrastructure solutions.

Generative AI

2,793 items

Companies working on generative AI applications and infrastructure.

AI 100 (2025)

100 items

AI 100 (All Winners 2018-2025)

100 items

Latest Qdrant News

Sep 4, 2025

Multi-Agent System Architecture Every buyer needs prompt, precise, and personalized assistance in real-time. Once upon a time, the customer experience was handled manually, by sales folks across the counter or over the phone. But this was not a scalable system. So in came traditional chatbots, which brought in consistency across interactions but now struggle with conversational memory and complex, multi-turn interactions. Multi-agent systems can offer a solution here. These systems emulate human-like collaboration, with various specialized agents working cohesively to achieve objectives. In this blog post, we will construct an intelligent customer support agent utilizing Lyzr for orchestration and Qdrant for data storage and retrieval, creating a scalable solution that enables businesses to offer truly context-aware, real-time customer support. Problem Statement & Solution Understanding conversation context across multiple turns. Retrieving relevant information from customer data, helpdesk logs, FAQs, policies, and handbooks. Classifying and routing issues appropriately. Analyzing sentiment to assess customer satisfaction. Escalating issues when necessary. Providing empathetic, helpful responses. This tutorial will guide companies in building a complete end-to-end customer support system. While this is a demo, it can be extended into a full-fledged product, as it incorporates all the complex components typically found in a production-ready system. Additionally, this guide will help developers understand how to build Multi-Agent Systems (MAS). The system offers developers a scalable framework for creating context-aware, enterprise-grade support solutions, ultimately improving customer satisfaction and operational efficiency. High-Level System Architecture Our multi-agent system comprises several components: Agents: Specialized entities handling tasks like tenant resolution, customer info extraction, ticket retrieval, FAQ/policy/handbook retrieval, routing, sentiment analysis , escalation, and response generation. Orchestrator (Lyzr): Manages agent lifecycles, facilitates inter-agent communication, and orchestrates the workflow. Search/Retrieval (Qdrant): A vector database for storing and retrieving multi-index data using hybrid search. Context Store: Maintains conversation history and contextual data. Qdrant’s Role Qdrant serves as the central vector store, enabling: Multi-Index Data Storage: Stores customer data, helpdesk logs, and knowledge base content, indexed by different keywords for faster search. Fast Hybrid Search: Combines dense and sparse vectors for accurate retrieval. Payload-Indexed Metadata Filtering: Filters results by tenant, customer, or source type. Context Storage: Persists agent memory for multi-turn interactions. Lyzr’s Role Agent Lifecycle Management: Creates and manages agent instances. Inter-Agent Communication: Passes context between agents. Real-Time Query Routing: Directs queries and fuses results. Lyzr provides two solutions: a no-code platform and a low-code platform. With the no-code platform, users can build agents using the provided UI elements, though this approach offers less control over how the agents are defined. In contrast, the low-code Lyzr library, Lyzr Automata, provides a cleaner implementation. It retains the simplicity of low-code development while giving users greater flexibility and control in defining their agents. Prerequisites Lyzr: Install the Lyzr automata library. # using uv as the package manger # this will install all the dependencies curl -Ls https://astral.sh/uv/install.sh | bash [project] version = "0.1.0" description = "An enterprise-grade, multi-agent customer support system built with the Lyzr agentic framework and Qdrant vector database." license = {text = "MIT"} { "title": "Return and Exchange Policy", "description": "Our Return and Exchange Policy allows customers to return or exchange eligible items within 7 days of delivery. To qualify, the item must be unused, unwashed, and in its original packaging with all tags intact. For electronics and high-value items, the product should be sealed and unopened. Once a return request is initiated, our logistics team will schedule a pickup within 2-4 working days. After inspection of the returned item, we will either issue a replacement or process a refund as per the customer’s request. Please note that certain product categories such as personal care, lingerie, customized products, and perishable goods are non-returnable unless received damaged or defective. Items damaged due to misuse or mishandling will not be accepted. Our exchange option is limited to size and color variations of the same product, subject to stock availability. If an exchange is not possible, a refund will be issued as per our Refund Policy. The company reserves the right to deny any return if the returned product does not meet our quality guidelines. ", "tags": ["returns", "refunds"] "title": "Refund Policy", "description": "Refunds are processed only after successful inspection of returned products or in cases of failed or canceled orders. Refunds will be credited to the original payment method within 5-7 business days, depending on your bank or payment provider. If the payment was made using COD, refunds will be processed through a bank transfer or store credit. For partial refunds due to damaged items or shipping discrepancies, the refunded amount will correspond to the value of the affected product(s) only. In rare cases, if refund processing is delayed due to bank or payment gateway issues, we request customers to allow 2 additional business days before escalation. Refunds for promotional discounts or coupon-based purchases will exclude the coupon value unless explicitly mentioned. If a refund fails due to incorrect payment details provided by the customer, we may require verification documents for re-processing. Refund requests related to fraudulent activity, suspicious returns, or excessive returns from a single account may be reviewed and declined as per our Fraud Prevention Guidelines. ", "tags": ["refunds"] order_texts, order_image, order_payload = zip(*order_points) upsert_in_batch(order_texts, order_payload, "orders", batch_size, image=order_image) print(f"Ingested {len(order_points)} points into 'orders' collection.") if __name__ == "__main__": ingest_data(data_path = 'data') This script is the data ingestion pipeline for the multiagent system, responsible for embedding and storing diverse data in Qdrant for fast retrieval. Model Setup — Initializes dense (BAAI/bge-small-en-v1.5), sparse (prithivida/Splade_PP_en_v1), and image (Qdrant/clip-ViT-B-32-vision) embeddings. Knowledge Base Processing — Reads tenant FAQs, policies, and handbooks (JSON), formats them with contextual labels, and prepares them for embedding. Order Data Processing — Pairs order descriptions from orders.csv with corresponding product images for multimodal search. Tenant Data Ingestion — Loads CRM and helpdesk logs into user_data, KB entries into knowledge_base, and product data into orders. Batch Embedding & Upload — Generates embeddings and upserts them into the appropriate Qdrant collections. Step 3: Defining Agents from lyzr_automata import Agent "- The 'message' should be empathetic, helpful, and contextually relevant.\n" "- The 'concise_reason' should summarize how issue type, sentiment, KB context, and history influenced the response.\n" "- If you're unsure on you could help, escalate the situation and say thta you have escalated and a support agent with contact you shortly to better undertands\n", "- If order ID is not provided ask for order ID\n", "- If order ID not found then you can ask customer to prvide correct order id else you won't be able to process the request\n", "- In all of the user's request, first try to process the request by yourself, if you can't then tell that someone from team will reach out\n", "- You're a customer support agent. You have access to all the necessary tools to process any query, just keep asking questions till you have all the info needed to process the request\n", "- Do not include any extra text or explanation outside the JSON.\n" ) "- Base your decision strictly on sentiment and issue type.\n" "- Do not include any extra text or explanation outside the JSON.\n" ) ) This script defines the core AI agents of the multiagent system — each with a narrow, well-defined role and strict JSON output. Data Extraction Agents — Extract key details from queries (tenant type, order ID, image path, order info, customer info, related tickets). Knowledge & Policy Retrieval Agents — Retrieve relevant FAQs, company policies, and handbook entries. Product Quality & Returns Agents — Compare product images for defects and validate returns against original orders. Routing, Sentiment & Escalation Agents — Classify issue types, detect sentiment, and determine escalation paths. Response Generation Agent — Consolidate all gathered context to produce a helpful, context-aware final reply. Step 4: Implementing Data Retriever In src/qdrant_util/qdrant_retriever.py, we define retriever functions that retrives the data needed for the task import json from fastembed import TextEmbedding, ImageEmbedding from fastembed import SparseTextEmbedding ) This script defines the retrieval layer of the multiagent system — the functions that fetch relevant context from Qdrant to support agent reasoning. It initializes dense, sparse, and image embedding models, and provides utilities for: General Context Retrieval → retrieve_context() performs hybrid dense+sparse (and optional image) search with prefetch, fusion (RRF), and metadata filters like tenant_id, customer_id, source_type, and tags. Customer Data Retrieval → retrieve_customer_info() pulls CRM records from the user_data collection, while retrieve_customer_helpdesk_logs() fetches and filters relevant helpdesk tickets for a given query. Knowledge Base Retrieval → retrieve_related_knowledge_base() fetches FAQs, policies, or handbook entries with hybrid search, returning top-matched documents. Order Data Retrieval → retrieve_order_info() retrieves structured order details for a given tenant, customer, and order ID, while retrieve_image_info() supports multimodal retrieval by combining text and product images. Together, these functions form the backbone of retrieval-augmented reasoning, ensuring every agent task has precise, tenant-specific, and context-rich inputs from Qdrant. Step 5: Implementing Tasks from qdrant_client import QdrantClient return response_task This script wraps the earlier raw agents into executable Task objects for the orchestration pipeline. It loads the Gemini LLM , connects to Qdrant, and defines the following: Pure Extraction Tasks — Extract structured details (e.g., image path, order ID, tenant type). Retrieval-Augmented Tasks — Pull context from Qdrant before extraction (customer info, tickets, FAQs, policies, handbooks, order info). Multimodal Validation Tasks — Combine text and image data for product quality checks and return validation. Flow Control Tasks — Handle routing, sentiment analysis , and escalation decisions. Response Generation Task — Use all gathered context, history, and classification outputs to generate the final answer. Step 6: Running the Chat Session In run_chat.py, we orchestrate the workflow using Lyzr’s LinearSyncPipeline. import uuid run_session() This script is the live chat orchestrator for the multi-agent system, integrating all agents, retrieval functions, and caching into real-time query handling. It loads the Gemini 2.0 Flash model, sets up a semantic cache (threshold = 0.2), and manages session history. For each query, the system: Resolves the tenant. Checks the cache. If no cache hit, retrieves and processes customer info, tickets, knowledge base entries, order details, and product validation/quality checks. The gathered context then flows through a final agent chain — Routing, Sentiment, Escalation, and Response — in a sequential pipeline, generating a context-aware reply that is also stored for future reuse. Session 39611266–1bd1–454e-bc0d-5f5b84ead76a started. User: Can you help me with an order START PIPELINE MultiAgentSupport: start time: 1755072264.380146 END PIPELINE MultiAgentSupport end time: 1755072268.179048 execution time: 3.7989020347595215 Agent: Sure I can help you with the order, can you please provide me with the correct orden ID? User: Can you help me with an order START PIPELINE MultiAgentSupport start time: 1755072270.604258 END PIPELINE MultiAgentSupport end time: 1755872273.513891 execution time: 2.909632921218872 Agent: Sure I can help you with the order, can you please provide me with the correct order ID? User: orer id is ORD-0011 START PIPELINE MultiAgentSupport: start time: 1755072276.249501 END PIPELINE MultiAgentSupport end time: 1755072279.918436 execution time: 3.6609349250793457 Agent: Thank you for providing the Order ID. This Order ID seems to be related to Running Shoes which was ordered on 07–07–2025 and is already delivered to you. How can I help you with this order? User: Agents make many LLM requests to perform a task, so it’s a good idea to cache queries. This way, when someone asks a similar question, the system can return the cached result instead of making a new LLM call — reducing operational costs. In the example above, without caching, the exact same query triggered two separate LLM calls, unnecessarily increasing the cost. Semantic Caching Extracted Order Info: Order ID: ORD-0154, Customer ID: CUST-010, Order Date: 2025–07–09, Product: Wireless Speaker, Quantity: 1, Total PMOUR: 90.0, Pay ment Status: Paid, Delivery Status: Delivered, Shipping Address: Seoul, South Korea START PIPELINE MultiAgentSupport :: start time: 1755070964.8231406 END PIPELINE MultiAgentSupport :: end time: 1755070968.294755 :: execution time: 3.471614360809326 Added new entry to semantic cache. Agent: I understand your frustration regarding the delayed refund for order ORD-0154. I’ve checked your order details and see that it was d elivered. Since the refund hasn’t been processed within the expected timeframe, I’ve escalated this issue to our dedicated support team. Th ey will investigate the delay and provide you with an update within 48 hours. You will be contacted shortly to better ur. d the issue. Here, the user enquires about the refund status. The agent successfully gathers all related information and crafts a response based on it. The agent’s capabilities could be further enhanced by integrating real-time calling APIs to make a call, understand the situation, and resolve it accordingly. The more external tools you integrate, the more autonomous your agents become in resolving tasks. Semantic Caching In our semantic cache vector database, this query is now stored. If a similar query is received later, the agent can use the cached information to provide a response instead of running the entire pipeline. This image shows the caching of the user query and the agent’s response in the Qdrant database. You can access the Qdrant dashboard using localhost: http://localhost:6333/dashboard Session 5ee9e512–8098–4816–8862–466763d365d4 started. User: i have not received refund on my speaker, order id -> ORD-0154. how long will it take? CACHE HIT! (Score: 0.1609, Time: 0.04925) Agent: I understand your frustration regarding the delayed refund for order ORD-0154. I’ve checked your order details and see that the refund hasn’t been processed within the expected timeframe. I’ve escalated this issue to our dedicated team. They will investigate the delay and provide you with an update within 48 hours. You will be contacted shortly to better understand the issue. The next time a user asks a similar question, the agent will reuse the previously generated response, reducing operational costs. Multi-Modal Capability Session 14c07706–7847–4184–9dc7-ad081e8f5196 started. User: I want to return my speaker with order id ORD-0154, this is the image of the product: notebooks/1.png CACHE MISS! Extracted Order Info: Order ID: ORD-0154, Customer ID: CUST-010, Order Date: 2025–07–09, Product: Wireless Speaker, Quantity: 1, Total Amount: 90.0 payment Status: Paid, Delivery Status: Delivered, Shipping Address: Seoul, South Korea Extracted Return Validation Check: {‘is_same_product’: ‘no’, ‘is_returnable’: ‘no’, ‘concise_reason’: ‘The returned item is a Wireless Mouse, it the original product was a Wireless Speaker.’} Extracted Product Quality Check: {‘is_same_product’: ‘no’, ‘defect_detected’: ‘no’, ‘is_returnable’: ‘no’, ‘concise_reason’: ‘The product name in the retrieved image is ‘Wireless Mouse’ while the original product is ‘Wireless Speaker’.’} START PIPELINE MultiAgentSupport: : start time: 1755072160.697152 END PIPELINE MultiAgentSupport: : end time: 1755072164.527541 : : execution time: 3.830389022827144 Added new entry to semantic cache. Agent: I understand you want to return your wireless speaker with order ID ORD-0154. However, the image you provided shows a wireless mouse, not a wireless speaker. Also, our product quality check confirms that the product you have sent for return is a ‘Wireless Mouse’ instead of ‘Wireless Speaker’. Because of this discrepancy, I am unable to process your return request as the product received is not the one you ordered. Can you please confirm if there has been a mistake? In this example, we tested whether the agent could perform a product quality check during a return. As shown, it rejected the return request because the original product did not match the provided image. Conclusion To conclude, this Lyzr–Qdrant multi-agent system combines specialized AI agents, retrieval-augmented reasoning, and semantic caching to deliver fast, accurate, and empathetic customer support. It draws context from diverse data sources, learns from past interactions, and scales to handle complex, multi-step queries — making it a powerful foundation for next-generation automated support. GitHub You can find the full source code for this multiagent customer support system at the following GitHub repository:

Qdrant Frequently Asked Questions (FAQ)

When was Qdrant founded?

Qdrant was founded in 2021.

Where is Qdrant's headquarters?

Qdrant's headquarters is located at Chausseestrasse 86, Berlin.

What is Qdrant's latest funding round?

Qdrant's latest funding round is Series A.

How much did Qdrant raise?

Qdrant raised a total of $37.79M.

Who are the investors of Qdrant?

Investors of Qdrant include 42CAP, Unusual Ventures, Spark Capital, IBB Ventures, Amr Awadallah and 3 more.

Who are Qdrant's competitors?

Competitors of Qdrant include Langbase and 7 more.

What products does Qdrant offer?

Qdrant's products include Qdrant Open Source and 3 more.

Loading...

Compare Qdrant to Competitors

Pinecone specializes in vector databases for artificial intelligence applications within the technology sector. The company offers a serverless vector database that enables low-latency search and management of vector embeddings for a variety of AI-driven applications. Pinecone's solutions cater to businesses that require scalable and efficient data retrieval capabilities for applications such as recommendation systems, anomaly detection, and semantic search. Pinecone was formerly known as HyperCube. It was founded in 2019 and is based in New York, New York.

ApertureData operates within the data management infrastructure domains. The company's offerings include a database for multimodal AI that integrates vector search and knowledge graph capabilities for AI application development and data management. ApertureData serves sectors that require AI applications, including generative AI, recommendation systems, and visual data analytics. It was founded in 2018 and is based in Los Gatos, California.

Weaviate is a company that develops artificial intelligence (AI)-native databases within the technology sector. The company provides a cloud-native, open-source vector database to support AI applications. Weaviate's offerings include vector similarity search, hybrid search, and tools for retrieval-augmented generation and feedback loops. Weaviate was formerly known as SeMi Technologies. It was founded in 2019 and is based in Amsterdam, Netherlands.

Hyperspace is a technology company that operates in the cloud-native database and search technology sectors. The company offers an elastic compatible search database that integrates vector and lexical search capabilities. Hyperspace serves sectors that require search solutions, such as e-commerce, fraud detection, and recommendation systems. It was founded in 2021 and is based in Tel Aviv, Israel.

LanceDB operates as a serverless vector database for artificial intelligence (AI) applications. The company builds applications for generative artificial intelligence (AI), recsys, search engines, content moderation, and more. It was founded in 2022 and is based in San Francisco, California.

Vespa specializes in data processing and search solutions within the AI and big data sectors. The company offers an open-source search engine and vector database that enables querying, organizing, and inferring over large-scale structured, text, and vector data with low latency. Vespa primarily serves sectors that require scalable search solutions, personalized recommendation systems, and semi-structured data navigation, such as e-commerce and online services. It was founded in 2023 and is based in Trondheim, Norway.

Loading...