Gretel

Founded Year

2020

Stage

Acquired | Acquired

Total Raised

$65.5M

Revenue

$0000

About Gretel

Gretel is a company that provides a synthetic data platform for artificial intelligence applications across various industries. The company generates synthetic datasets, transforms data quality, and supports the training of AI models while maintaining privacy. Gretel serves sectors that require data privacy and security, including finance, healthcare, and the public sector. It was founded in 2020 and is based in San Diego, California. In March 2025, Gretel was acquired by NVIDIA.

ESPs containing Gretel

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

The synthetic patient data platforms market offers artificially generated data intended to imitate real patient information. Healthcare organizations can use synthetic patient data to overcome challenges related to data privacy, security, and limited access to real patient data. Synthetic patient data provides a safe and compliant alternative for testing, research, and training purposes while pres…

Gretel is named a Leader among 7 other companies, including Aetion, Segmed, and MDClone.

Gretel's Products & Differentiators

Gretel Synthetics

The most advanced privacy API for synthetic data. Apply differentially private learning to create highly accurate, synthetic data with enhanced privacy guarantees.

Research containing Gretel

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Gretel in 10 CB Insights research briefs, most recently on Apr 11, 2025.

Mar 26, 2025

Nvidia’s next big bet? Physical AI

Feb 20, 2024

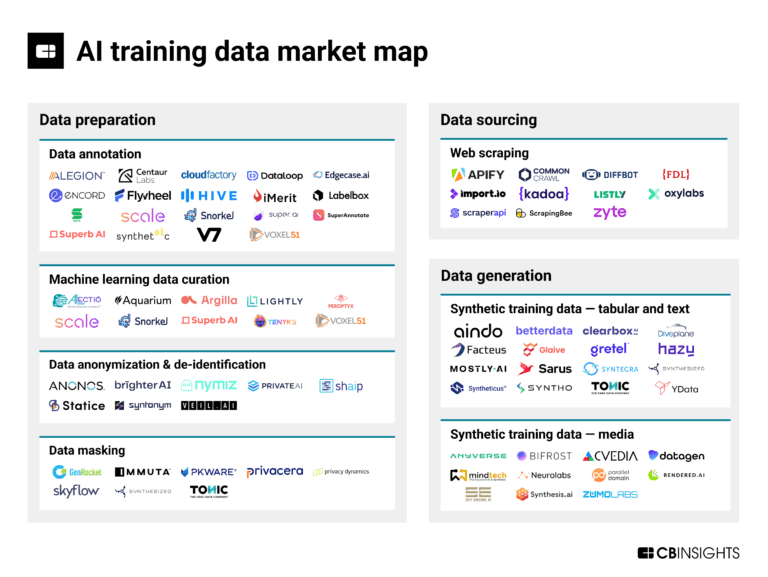

The AI training data market map

Oct 13, 2023

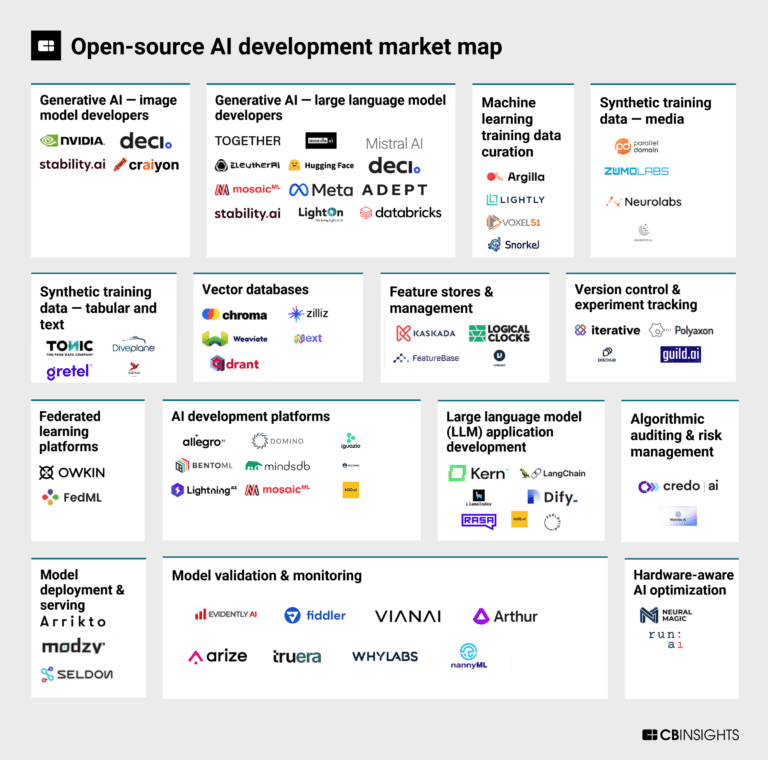

The open-source AI development market map

Sep 29, 2023

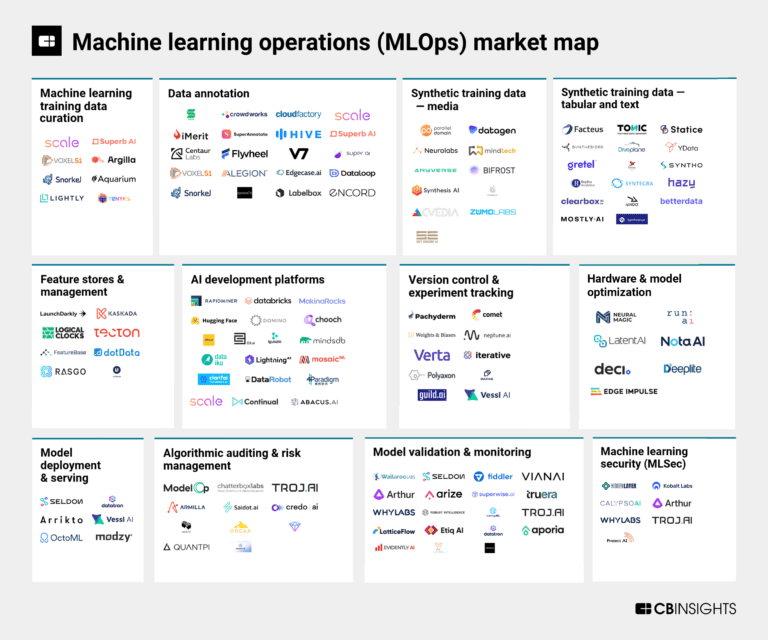

The machine learning operations (MLOps) market map

Sep 6, 2023

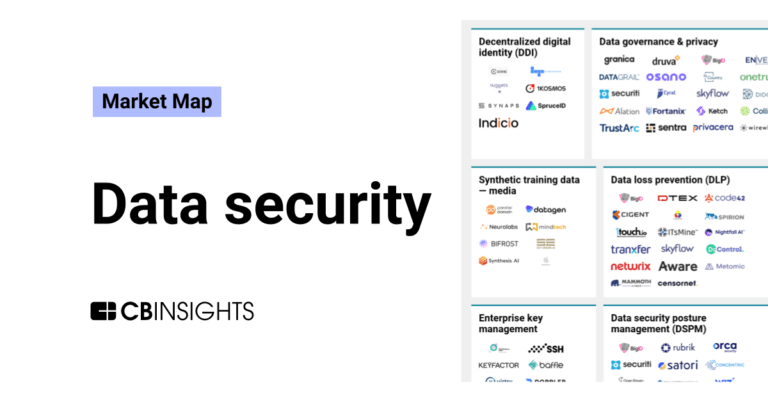

The data security market map

Expert Collections containing Gretel

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Gretel is included in 4 Expert Collections, including Cybersecurity.

Cybersecurity

11,252 items

These companies protect organizations from digital threats.

AI 100 (All Winners 2018-2025)

100 items

Generative AI

2,793 items

Companies working on generative AI applications and infrastructure.

Artificial Intelligence

10,195 items

Gretel Patents

Gretel has filed 1 patent.

| Application Date | Grant Date | Title | Related Topics | Status |
|---|---|---|---|---|
| 7/31/2014 | 1/3/2017 | | Information systems, Security technology, Access control, Computer security, Surveillance | Grant |

Latest Gretel News

Jul 8, 2025

Test Data Management Meets Sustainability

The Intersection of Test Data Management and Sustainability

Sustainability, which means "meeting the needs of the present without compromising the ability of future generations to meet their own needs" [2], applies directly to software testing. Large-scale software testing consumes significant resources, and without efficient management, it leads to wasted storage, increased energy consumption, and unnecessary duplication of data.

The sustainability dimensions in software testing include:

Environmental Sustainability: Reducing energy consumption through optimized storage and data lifecycle management.

Economic Sustainability: Reducing costs through automated test execution and maintenance.

Social Sustainability: Ensuring ethical data use, employee well-being, and social responsibility.

Technical Sustainability: Using long-term test automation solutions that evolve with technological advancements.

Common Challenges:

Over-provisioning: Businesses give test environments excessive storage, most of which is never used. The result is wasted energy and higher hardware demand.

Data Waste: Ineffective data-aging procedures let redundant, irrelevant, or out-of-date test data accumulate, which clogs storage systems and increases maintenance costs.

Manual Processes: Without automation, test data generation is not always consistent, which can lead to inefficient replication of previously produced content.

Best Practices for Sustainable Test Data Management

Smart Generation of Test Data

Synthetic test data: Synthetic test data can be used instead of sensitive production data. This is privacy-compliant and more resource-efficient. Various synthetic data generation tools create realistic datasets for specific testing scenarios without exposing real user data.
Examples of such tools include:

MOSTLY AI: Known for generating highly accurate synthetic data that preserves the statistical properties of the original data.

Tonic.ai: Offers solutions for generating realistic, de-identified test data.

Gretel.ai: Provides a platform and APIs for creating privacy-preserving synthetic data.

Synthetic Data Vault (SDV): An open-source library for generating synthetic data for various data types.

Needs-Based Generation: Test data is generated precisely for the needs of the test scenarios, without generating and storing data that may not be required. Identifying key test scenarios and aligning data generation efforts with them reduces overhead.

Data Masking: When production-like data is needed, anonymization or pseudonymization ensures that data privacy is maintained. Masked data still retains the core characteristics necessary for testing while removing sensitive information.

Efficient Usage of Storage

Data compression: Compressed test data requires less storage and reduces data transfer times. Modern compression algorithms can significantly reduce file sizes without compromising data integrity. Examples of lossless compression algorithms commonly used in data storage include LZ77 (which, combined with Huffman coding, underlies the DEFLATE method used by ZIP and GZIP), LZ78, and Run-Length Encoding (RLE), which is effective for data with repeating sequences. Techniques like dictionary encoding and columnar storage, often implemented in modern databases, also contribute to efficient compression by reducing redundancy within data sets.

Deduplication: Duplicates in data should be identified and removed to save storage space. Deduplication tools scan stored data for identical segments so that no duplicate copies are kept.

Intelligent Archiving: If test results are no longer relevant, they can be archived or removed.
Such archiving solutions, integrated with data lifecycle management frameworks, can automate this process and ensure compliance with data retention policies and industry regulations.

Automation and Tools

Automated TDM solutions enable better utilization of resources. Test data generation and management tools can optimize processes, reduce manual effort, and ensure consistency of data. For example, AI-powered solutions can predict data needs and adjust storage allocation dynamically for even higher efficiency.

Regulatory and Ethical Considerations

TDM practices should be aligned with regulatory and ethical requirements. Data privacy laws like GDPR and HIPAA have put immense pressure on organizations to handle sensitive information with care. Integrating compliance into the TDM workflow prevents penalties while fostering sustainability.

GDPR (General Data Protection Regulation): A regulation enforced in the European Union that governs data privacy and security. It mandates strict guidelines on collecting, storing, and processing personal data, ensuring that organizations maintain transparency and accountability. Under GDPR, individuals have rights over their data, including the right to access, rectify, and erase their personal information. [5]

HIPAA (Health Insurance Portability and Accountability Act): A U.S. law that establishes national standards for protecting sensitive patient health information. HIPAA ensures that healthcare providers, insurers, and business associates maintain the confidentiality and security of medical data. It requires safeguards for electronic health records and imposes strict penalties for breaches. [6]

In addition, test data creation and usage should be based on ethical principles such as fairness, transparency, and accountability. Organizations should avoid letting synthetic data introduce bias or inaccuracies that may affect the performance of the software.
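As a rough illustration of two of the practices described above, data masking and deduplication, the following Python sketch pseudonymizes a sensitive field with a keyed hash and then drops records whose content is identical. It is a minimal sketch and not taken from the article or from any of the tools it names: the function names, the `email` field, the `user_` token prefix, and the demo key are all hypothetical, and only the Python standard library is used.

```python
import hashlib
import hmac
import json


def pseudonymize(value: str, key: bytes) -> str:
    """Replace a sensitive value with a stable keyed-hash token (hypothetical scheme)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "user_" + digest[:12]


def deduplicate(records):
    """Keep the first occurrence of each record, compared by a hash of its content."""
    seen = set()
    unique = []
    for record in records:
        # Serialize with sorted keys so equal records always hash identically.
        fingerprint = hashlib.sha256(
            json.dumps(record, sort_keys=True).encode("utf-8")
        ).hexdigest()
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(record)
    return unique


def mask_and_deduplicate(records, key, sensitive_field="email"):
    """Mask one field in every record, then remove exact duplicates."""
    masked = [
        {**r, sensitive_field: pseudonymize(r[sensitive_field], key)}
        for r in records
    ]
    return deduplicate(masked)


# Illustrative data only; the key below is a placeholder, not a real secret.
rows = [
    {"email": "ada@example.com", "plan": "pro"},
    {"email": "ada@example.com", "plan": "pro"},
    {"email": "bob@example.com", "plan": "free"},
]
clean = mask_and_deduplicate(rows, key=b"demo-key-not-for-production")
print(len(clean))  # 2
```

Because the token is derived from a keyed hash, the same input always maps to the same token, so relationships between records survive masking while the original value does not appear in the test data. Whether this is sufficient for a given GDPR or HIPAA obligation depends on how the key is managed and is outside the scope of this sketch.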
Sustainability Data Strategy: Key Insights

A well-defined sustainability data strategy involves the following [3]:

Factual Data, Not Estimates: Relying on precise, audit-ready sustainability data rather than approximations ensures accuracy and reliability. Many organizations currently rely on sector averages, which are often imprecise and fail to provide actionable insights for reducing environmental impact.

Transparency in Net Zero Motives: Organizations must disclose actual emissions and offsets purchased, aligning financial data with sustainability goals. The rising costs of carbon offsets make it crucial to provide clear, data-backed sustainability strategies.

Operational Improvements and Long-Term Value Creation: High-quality sustainability data helps reduce carbon emissions, minimize back-office expenses, and improve decision-making processes. By integrating sustainability metrics with financial data, companies can track the effectiveness of green initiatives.

Audit-Ready and Proficient Data: The increasing scrutiny on ESG data means organizations must ensure their sustainability reporting aligns with regulatory expectations and can withstand audits. Consistent and structured data management supports compliance and enhances credibility.

Organizations must also master challenges in ESG data reporting, standardization, and regulatory compliance to enhance sustainability initiatives. ESG (Environmental, Social, and Governance) refers to a set of criteria used by organizations, investors, and regulators to assess a company's commitment to sustainability and ethical business practices. ESG data plays a crucial role in sustainability reporting and decision-making. Companies are expected to disclose their environmental impact, social responsibility efforts, and governance practices. However, challenges such as data standardization, regulatory compliance, and audit readiness make ESG reporting complex.
A robust sustainability data strategy ensures accurate, transparent, and audit-ready ESG reporting, helping organizations align their sustainability initiatives with business goals. [7]

Sustainable Software Testing in Practice

Software testing has a significant impact on sustainability, with long-term benefits often overlooked [2]. The key aspects of sustainable software testing include:

Minimizing Environmental Impact: Sustainable software testing reduces unnecessary computing resource consumption, leading to lower energy use and reduced carbon footprints. This includes optimizing test execution schedules to run during off-peak hours and leveraging cloud environments with renewable energy sources.

Enhancing Economic Sustainability: Test automation reduces operational costs by streamlining repetitive testing tasks. Companies implementing automated, energy-efficient testing strategies benefit from reduced hardware and cloud expenditures.

Improving Technical Sustainability: Implementing scalable and maintainable test frameworks ensures that software testing processes remain efficient and adaptable to future technological advancements.

Encouraging Knowledge-Sharing and Collaboration: Sustainable testing practices involve knowledge transfer and continuous improvements, helping organizations develop resilient and future-ready test strategies.

Benefits of Sustainable TDM

The advantages of a sustainable approach to TDM include the following:

Cost Savings: Reduced storage needs lower hardware and cloud costs. Optimized test data usage decreases infrastructure expenses while improving efficiency.

Eco-Friendliness: Optimized data management results in lower energy consumption and a reduced carbon footprint. Green IT initiatives such as efficient data storage and retrieval mechanisms contribute to environmental sustainability.

Data Security: Synthetic and well-managed test data minimizes the risk of data privacy breaches.
Organizations can maintain compliance with data protection regulations while reducing redundant storage.

Operational Efficiency: Streamlined TDM processes improve overall development efficiency, allowing teams to focus on innovation. Sustainable TDM supports faster software release cycles and enhances quality assurance practices.

The Future of Test Data Management in a Greener IT Landscape

Emerging trends such as green computing and carbon-neutral cloud services are reshaping the IT industry. Companies are increasingly adopting environmentally friendly technologies and practices, including renewable energy-powered data centers and energy-efficient storage solutions.

AI and machine learning developments drive innovation in the field of TDM. These technologies enable predictive analytics, real-time optimization, and adaptive resource allocation, adding to sustainability. For example, AI can find patterns in test data usage and suggest optimizations that reduce waste. However, it is important to acknowledge that these technologies, particularly in their training and operation, can also be a source of significant energy consumption.

Conclusion

Test data management and sustainability go hand in hand. Optimizing TDM processes enables companies to save resources and, at the same time, contribute to environmental protection. This is the ideal moment to adopt more sustainable solutions and take a new look at your current procedures. Seize this opportunity to implement efficient generation and management strategies for test data now, for a greener future in IT. Sustainable TDM practices will bring balance to the needs of an organization between sustainability and environmental responsibility. It all begins with a few simple, achievable actions that add up to a significant impact. Together, we should build the path toward sustainability for the IT industry and beyond.

Sources:

[1] Dror Etzion and J. Alberto Aragon-Correa, "Big Data, Management, and Sustainability: Strategic Opportunities Ahead," May 10, 2016, Volume 29, Issue 2.

[2] Beer, A., Felderer, M., Lorey, T., & Mohacsi, S. (2021). "Sustainability in the Test Process." 1st International Workshop on the Body of Knowledge for Software Sustainability (BoKSS), IEEE ICSE Conference.

[3] Clusters Media Technology. (2022). "Sustainability Data Strategy: Top Key Components for a Positive Impact."

[4] Jim Soos, Planckton Data Technologies. (2023). "Sustainability Data Management."

[5] Official text of the General Data Protection Regulation (GDPR): https://gdpr-info.eu/

[6] More information about the Health Insurance Portability and Accountability Act (HIPAA): https://www.hhs.gov/...

[7] Learn more about Environmental, Social, and Governance (ESG): https://www.investopedia.com/...

Gretel Frequently Asked Questions (FAQ)

When was Gretel founded?

Gretel was founded in 2020.

Where is Gretel's headquarters?

Gretel's headquarters is located at 8910 University Center Lane, San Diego.

What is Gretel's latest funding round?

Gretel's latest funding round is Acquired.

How much did Gretel raise?

Gretel raised a total of $65.5M.

Who are the investors of Gretel?

Investors of Gretel include NVIDIA, Google Cloud Next, Microsoft for Startups Pegasus Program, Greylock Partners, Moonshots Capital and 5 more.

Who are Gretel's competitors?

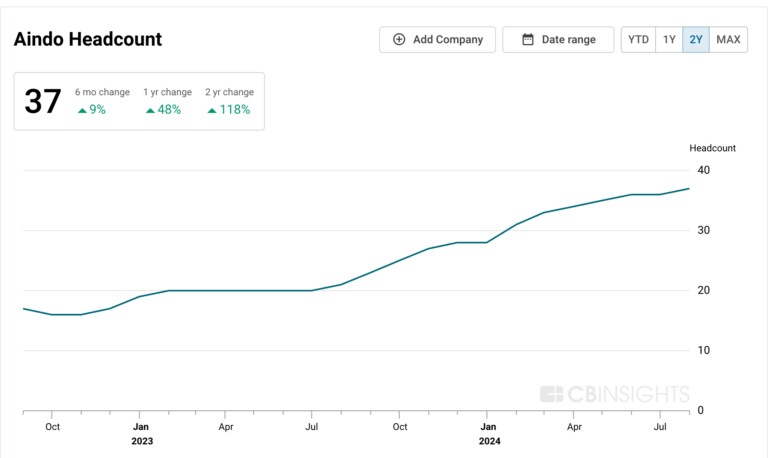

Competitors of Gretel include GenRocket, Hazy, Rockfish Data, Aindo, Ydata and 7 more.

What products does Gretel offer?

Gretel's products include Gretel Synthetics and 2 more.

Compare Gretel to Competitors

MOSTLY AI specializes in synthetic data generation for various business sectors and focuses on creating high-fidelity synthetic datasets. The company offers a platform that enables the generation, synthesis, and creation of data, ensuring privacy and compliance with regulations. MOSTLY AI's solutions cater to needs such as AI/ML development, data sharing, testing and QA, and self-service analytics, providing tools for data democratization and insights through a natural language interface. It was founded in 2017 and is based in Vienna, Austria.

Tonic AI specializes in data privacy and synthetic data generation within the software development sector. It offers a suite of tools for de-identifying and synthesizing realistic test data, ensuring compliance with data privacy regulations while maintaining data utility for development and testing. Tonic AI primarily serves sectors that require rigorous data privacy measures, such as healthcare, financial services, and e-commerce. The company was founded in 2018 and is based in San Francisco, California.

YData specializes in enhancing data quality for data science and artificial intelligence applications within the technology sector. The company offers a platform for synthetic data generation, data quality profiling, and data-centric AI to improve AI model performance and ensure data privacy. It primarily serves sectors such as financial services, telecommunications, healthcare, and retail. The company was founded in 2019 and is based in Seattle, Washington.

Dedomena operates in the fields of artificial intelligence and data science, focusing on synthetic data generation, data anonymization, and AI model enhancement. The company provides services that help businesses handle GDPR-compliant data. Dedomena's platform serves various industries, including banking and healthcare, by providing functionalities related to data management. It was founded in 2021 and is based in Madrid, Spain.

Synthesized specializes in API-driven data generation and automation for data-driven organizations. The company offers a platform that enables the creation, validation, and sharing of data for analysis, model training, and software testing, with a focus on machine learning and quality assurance teams. Synthesized's solutions cater to various sectors, including software development, data analysis, and regulatory compliance. It was founded in 2018 and is based in London, United Kingdom.

Clearbox AI specializes in synthetic data solutions within the AI and analytics sectors. The company offers services that generate synthetic data to enhance privacy compliance, improve data quality, and support automated testing for AI projects. Clearbox AI's technology is sector-agnostic, catering to various industries such as finance, healthcare, retail, energy, and mobility. It was founded in 2019 and is based in Turin, Italy.
